In robotics, following a human or another robot draws on several disciplines at once: sensing, perception, planning, and control. But how do follow robots actually work, and what lets them trail a moving target autonomously? In this post, we’ll look at the science behind follow robots, the challenges they face, and the four key technological components that let them follow effectively. Whether you’re developing a follow robot or are simply curious about the technology, this article should give you a useful overview.

The Core of Follow Robots: Two Simple Questions

At the heart of a follow robot’s functionality are two main questions:

- Where is the target?

- How can the robot move to follow the target?

However, achieving this requires more than just basic movement. Follow robots need to tackle challenges such as obstacle avoidance, navigation, and real-time decision-making. Let’s dive deeper into how robots achieve these goals and make intelligent decisions in dynamic environments.

Two Main Approaches: “Listening” vs. “Looking”

To understand how follow robots track their targets, we can break down the two primary methods: listening and looking. These methods are as different as they sound, with each offering its own set of advantages and challenges.

Listening: Signal-Based Tracking

When we talk about “listening,” we mean the robot’s ability to follow based on radio signals such as Bluetooth. The idea is simple: the robot measures the received signal strength (often reported as RSSI) and uses it to estimate how far away it is from its target. In general, the stronger the signal, the closer the target is.
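To make this concrete, here is a minimal sketch of how a signal-strength reading might be turned into a distance estimate using the log-distance path-loss model. The calibration values are assumptions chosen for illustration: `tx_power_dbm` is the RSSI expected at 1 m, and `path_loss_exponent` describes how quickly the signal fades. Real values have to be measured for a specific radio and environment.

```python
def rssi_to_distance(rssi_dbm, tx_power_dbm=-59.0, path_loss_exponent=2.0):
    """Estimate distance in meters from an RSSI reading.

    Uses the log-distance path-loss model:
        rssi = tx_power - 10 * n * log10(distance)
    tx_power_dbm (RSSI at 1 m) and path_loss_exponent (n) are
    illustrative defaults, not values from any particular device.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


if __name__ == "__main__":
    for reading in (-59.0, -65.0, -79.0):
        print(f"{reading} dBm -> {rssi_to_distance(reading):.2f} m")
```

Because the relationship is exponential, a few dB of noise at long range shifts the estimate by meters, which is one reason raw signal strength is usually smoothed before use.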

However, signal-based tracking has its limitations. The main challenge lies in determining direction and distance accurately, because a single signal-strength reading says roughly how far away the target is but not where it is. In Bluetooth-based systems, for instance, the robot can estimate the target’s position from several distance readings, a technique properly called trilateration (though it is often loosely described as triangulation). This involves two or more sensors (A and B) positioned at known points and a third device (C) that travels with the target. The robot continuously switches connections between these sensors, measuring the signal strength at each point to estimate the target’s position.

While this method works in many situations, it’s far from perfect. Signal interference, fluctuations in signal strength, and other environmental factors can make it difficult to pinpoint the target’s exact position, making this system more prone to errors and unreliable in dynamic or crowded environments.
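The geometry behind this can be sketched in a few lines. The example below assumes sensor A sits at the origin of the robot’s frame, sensor B sits `baseline` meters along the x-axis, and the two distances to C have already been estimated from signal strength. The function name and frame layout are hypothetical. Two sensors leave a mirror ambiguity, so the sketch also assumes the target is in front of the robot (y ≥ 0).

```python
import math


def locate_target(d_a, d_b, baseline):
    """Intersect two range circles to estimate the target position.

    Sensor A is assumed at (0, 0) and sensor B at (baseline, 0).
    d_a and d_b are the estimated distances from A and B to the target.
    Returns (x, y) with y >= 0, i.e. the solution in front of the robot.
    """
    x = (d_a ** 2 - d_b ** 2 + baseline ** 2) / (2.0 * baseline)
    y_squared = d_a ** 2 - x ** 2
    # Noisy ranges can make the circles miss each other; clamp to zero
    # instead of failing on a negative square root.
    y = math.sqrt(max(y_squared, 0.0))
    return x, y


if __name__ == "__main__":
    # Target actually at (1.0, 2.0) with sensors 0.4 m apart.
    d_a = math.hypot(1.0, 2.0)
    d_b = math.hypot(1.0 - 0.4, 2.0)
    print(locate_target(d_a, d_b, baseline=0.4))
```

With a short baseline, small range errors translate into large position errors, which matches the sensitivity to interference described above.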

Looking: Vision-Based Tracking

In contrast, vision-based tracking relies on cameras, sensors, and recognition algorithms to track the target. Instead of inferring position from fluctuating radio signals, the robot simply “sees” the target and follows it.

One common method is face recognition, which lets the robot track a specific person by identifying unique facial features, although it only works while the person’s face is visible to the camera. Another popular approach is feature recognition, where the robot follows visual markers, such as specific colors or patterns. Vision avoids the signal-strength problems described above and can provide more accurate tracking in many scenarios.

However, vision-based tracking comes with its own set of challenges. For one, it’s heavily dependent on the environment’s lighting and the presence of obstructions. A camera’s view can be blocked or distorted, making it harder for the robot to track the target. Additionally, vision-based systems require powerful hardware and software, which increases complexity and cost.
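Once a recognition algorithm has drawn a bounding box around the target, the robot still needs to turn that box into something it can steer toward. Here is a minimal sketch using the pinhole camera model. It assumes the detector is a separate step, the focal length in pixels is known from calibration, and the target’s real height is roughly known. All names and default values are illustrative.

```python
import math


def bbox_to_bearing_and_range(bbox, image_width_px, focal_length_px,
                              target_height_m=1.7):
    """Convert a detection bounding box into bearing and distance.

    bbox is (x_min, y_min, x_max, y_max) in pixels.
    Bearing is in radians, positive when the target is left of the
    image center. Distance uses similar triangles and the assumed
    real-world target height.
    """
    x_min, y_min, x_max, y_max = bbox
    height_px = y_max - y_min
    if height_px <= 0:
        raise ValueError("bounding box must have positive height")

    center_x = (x_min + x_max) / 2.0
    principal_x = image_width_px / 2.0  # assumes optical axis at image center
    bearing = math.atan2(principal_x - center_x, focal_length_px)
    distance = target_height_m * focal_length_px / height_px
    return bearing, distance


if __name__ == "__main__":
    print(bbox_to_bearing_and_range((270, 100, 370, 440), 640, 600.0))
```

The distance estimate degrades when the target is partly hidden or cut off by the frame, which is one concrete form of the obstruction problem mentioned above.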

Key Components of a Follow Robot

Now that we have a basic understanding of how robots can follow using signals or vision, let’s explore the four critical technological modules that make follow robots effective in real-world applications.

1. Target Localization Module

The first essential function of a follow robot is knowing where the target is. There are two main approaches for localization: sensor-based and vision-based.

- Sensor-based localization (using technologies like LiDAR, ultrasonic sensors, or RFID) helps the robot determine the target’s position by measuring distance and angle. It works well in many situations because sensors can provide accurate and reliable data. However, this method alone doesn’t account for obstacles, so additional techniques are needed.

- Vision-based localization (using cameras, depth sensors, or even body motion tracking) allows the robot to identify the target based on visual information. This method offers richer data, including spatial orientation and movement, but it’s more complex and computationally demanding.

Both methods have their strengths, and many advanced robots combine these techniques to improve accuracy and adaptability.
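One simple way to combine two localization sources is an inverse-variance weighted average, where the more trustworthy measurement gets more weight. The sketch below is an illustration of that idea rather than a description of any particular robot. The variances are assumed to come from sensor calibration, and the same function can be applied separately to each coordinate.

```python
def fuse_estimates(value_a, variance_a, value_b, variance_b):
    """Fuse two independent estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the
    less noisy sensor dominates. Returns (fused_value, fused_variance).
    """
    weight_a = 1.0 / variance_a
    weight_b = 1.0 / variance_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical readings: a range sensor says 2.0 m (low noise),
    # the camera says 2.4 m (higher noise).
    print(fuse_estimates(2.0, 0.04, 2.4, 0.16))
```

The fused variance is always smaller than either input variance, which is the formal version of the claim that combining techniques improves accuracy.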

2. Obstacle Recognition Module

Once the robot knows where the target is, it must avoid obstacles that might obstruct its path. This is where the obstacle recognition module comes into play.

Common technologies used for obstacle detection include:

- Ultrasonic sensors, which emit sound waves and measure their return time to detect nearby objects.

- LiDAR sensors, which use laser pulses to build a 2D or 3D map of the environment and detect objects at various distances.

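- Infrared sensors, which emit infrared light and measure its reflection to detect nearby obstacles in the robot’s path. (Passive infrared sensors, which detect heat, are better suited to sensing people than static obstacles.)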

The robot continuously scans the environment and adjusts its path to avoid collisions while maintaining its focus on following the target.
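The ultrasonic case is the easiest to show in code. The sketch below converts an echo’s round-trip time into a distance and checks it against a safety margin. The speed of sound is the usual figure for air at about 20 °C, and the 0.5 m threshold is an arbitrary illustrative value.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate, dry air at about 20 degrees C


def echo_to_distance(echo_time_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Convert a round-trip echo time into a one-way distance in meters."""
    # The pulse travels to the obstacle and back, so halve the path.
    return speed_m_s * echo_time_s / 2.0


def is_path_blocked(echo_time_s, safety_distance_m=0.5):
    """Return True if the detected object is inside the safety margin."""
    return echo_to_distance(echo_time_s) < safety_distance_m


if __name__ == "__main__":
    for t in (0.002, 0.010):
        print(t, echo_to_distance(t), is_path_blocked(t))
```

LiDAR ranging follows the same time-of-flight principle with light instead of sound, so the timing hardware has to be far more precise.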

3. Dynamic Path Planning and Obstacle Avoidance

Path planning is the brain of the robot’s movement system. In dynamic environments where both the robot and the target are constantly moving, it’s essential for the robot to calculate and adjust its route in real time.

This process involves building a map of the environment and dividing it into a grid of cells, each marked as navigable or blocked. The robot plans its movements on this map, adjusting its course to avoid obstacles and reach the target. Search algorithms such as A* let the robot optimize its route and replan as the environment changes around it.

While dynamic path planning can be computationally expensive, it’s necessary for ensuring that the robot follows the target smoothly without running into obstacles.
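As an illustration of grid-based planning, here is a compact A* search over an occupancy grid, where 0 marks a free cell and 1 marks an obstacle. It assumes four-directional movement with equal step cost, and it handles change in the simplest possible way: call `plan_path` again whenever the grid or the target cell is updated. Production planners typically reuse earlier results instead of starting over.

```python
import heapq


def plan_path(grid, start, goal):
    """Find a shortest 4-connected path on an occupancy grid with A*.

    grid is a list of rows (0 = free, 1 = obstacle); start and goal are
    (row, col) tuples. Returns a list of cells from start to goal, or
    None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic(nxt), new_cost, nxt)
                    )
    return None


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    print(plan_path(grid, (0, 0), (2, 0)))
```

Replanning from scratch on every update is where much of the computational expense comes from, especially on large or fine-grained grids.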

4. Robot Mobility Module

Finally, the robot mobility module is responsible for executing the movement commands. This includes controlling the robot’s wheels, servos, and other actuators to navigate the environment. A good mobility system ensures that the robot can move efficiently, with precise control over speed, direction, and positioning.

A well-designed mobility module also integrates feedback from the localization and obstacle avoidance systems, ensuring that the robot follows the target accurately while avoiding collisions.
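To show how localization feedback can drive the actuators, here is a minimal proportional controller for a differential-drive (two-wheeled) base. It assumes the localization module supplies the target’s distance and bearing, with positive bearing meaning the target is to the robot’s left. The gains, speed limits, follow distance, and wheel spacing are illustrative values that would need tuning on real hardware.

```python
def follow_command(distance_m, bearing_rad, follow_distance_m=1.0,
                   k_linear=0.8, k_angular=1.5,
                   max_linear=1.0, max_angular=2.0):
    """Compute (linear m/s, angular rad/s) velocities to follow a target.

    Drives forward in proportion to how far the target is beyond the
    desired follow distance, and turns in proportion to the bearing.
    """
    linear = k_linear * (distance_m - follow_distance_m)
    angular = k_angular * bearing_rad
    # Clamp to the platform's assumed speed limits.
    linear = max(-max_linear, min(max_linear, linear))
    angular = max(-max_angular, min(max_angular, angular))
    return linear, angular


def wheel_speeds(linear, angular, wheel_base_m=0.4):
    """Convert body velocities into (left, right) wheel speeds in m/s."""
    left = linear - angular * wheel_base_m / 2.0
    right = linear + angular * wheel_base_m / 2.0
    return left, right


if __name__ == "__main__":
    v, w = follow_command(distance_m=2.0, bearing_rad=0.3)
    print(v, w, wheel_speeds(v, w))
```

A real mobility module would also take input from obstacle avoidance, for example by scaling down `linear` when something is detected inside the safety margin.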

Challenges and Future of Follow Robots

Despite the impressive advancements in follow robot technology, several challenges remain. Signal-based systems can suffer from interference, and vision-based systems depend heavily on environmental conditions. Additionally, robots need to balance their tracking capabilities with the ability to handle obstacles, navigate complex environments, and adapt to dynamic situations.

However, with advancements in AI, machine learning, and sensor technologies like LiDAR, the future of follow robots looks promising. These robots are already being used in various industries, from logistics and warehouses to healthcare and retail, and their capabilities are only expected to improve as technology evolves.

Conclusion

Follow robots represent an exciting frontier in automation. By combining localization, obstacle detection, dynamic path planning, and mobility, these robots can efficiently and safely follow a target in dynamic environments. As the technology continues to evolve, we can expect even more sophisticated and reliable follow robots that will have broad applications in industries worldwide.

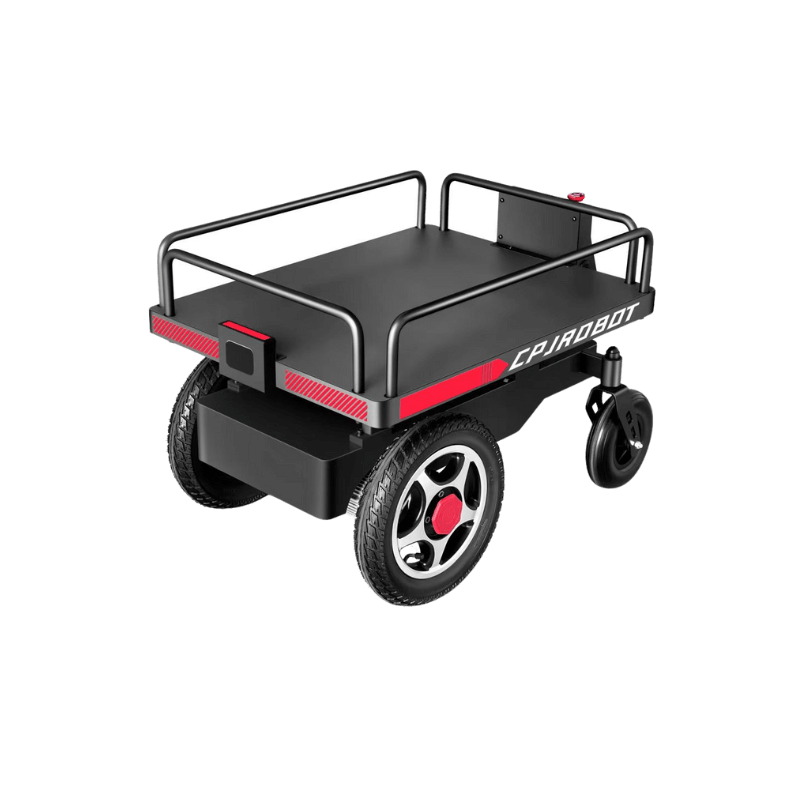

Are you ready to explore the future of robotics and automation? CPJ ROBOT specializes in LiDAR and intelligent robot manufacturing, providing custom solutions for your needs. Reach out today to discover how our cutting-edge technology can revolutionize your operations.